"Apple Intelligence"

And 9 other things worth sharing

This week kicked off with Apple hosting their Worldwide Developers Conference (WWDC). It’s a week-long event that opens with the company announcing software updates to iOS, iPadOS, macOS, watchOS, and their various other entertainment and security solutions.

Apple announced a bunch of updates, but none more notable than their first foray into artificial intelligence. Now, when I say that, I really mean Generative AI. Apple has used Artificial Intelligence in some form, from neural networks to computer vision (OCR) and the works, for almost a decade now. But you could never tell your Mac to rewrite something you wrote, or help you write a sonnet from scratch. And now, you can. Just like ChatGPT.

But the real discussion is Apple’s enduring reputation, penchant even, for a grand entrance. And not just because they execute things well, but because they insist on rebranding anything they put their hands on. For instance, earlier this year, virtual reality became spatial computing (Vision Pro).

Apple Intelligence - A brief introduction

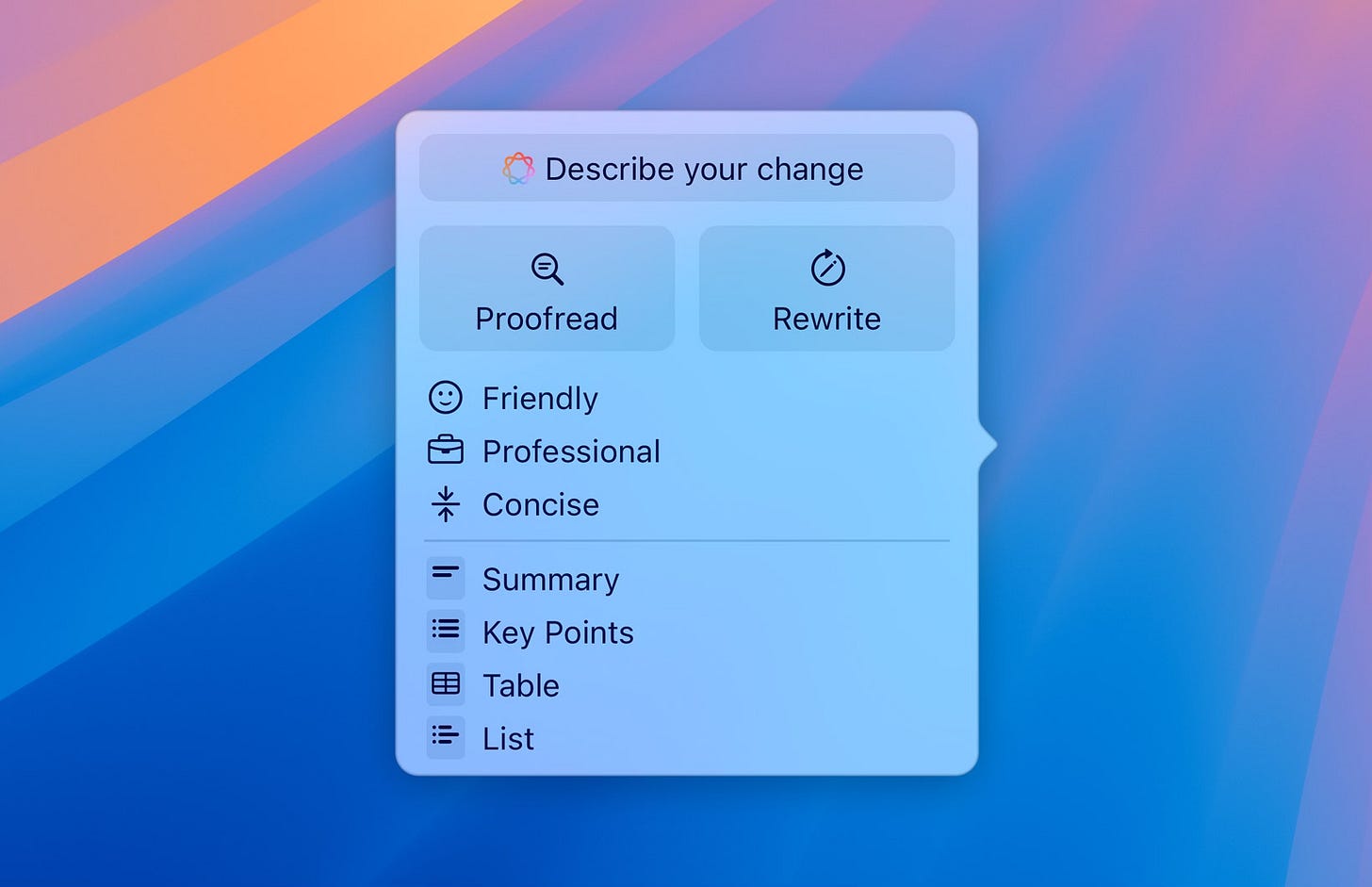

Apple Intelligence is a blanket term for many new AI features coming to Apple’s software offering later this year. Improvements to Siri, notifications, emails, and the debut of Generative AI features (rewrite functionality in your apps, for instance) are some of the leading examples. To see them all, check out this page. As for performance, there’s no indication that they will be any worse than the versions of these features that already exist elsewhere.

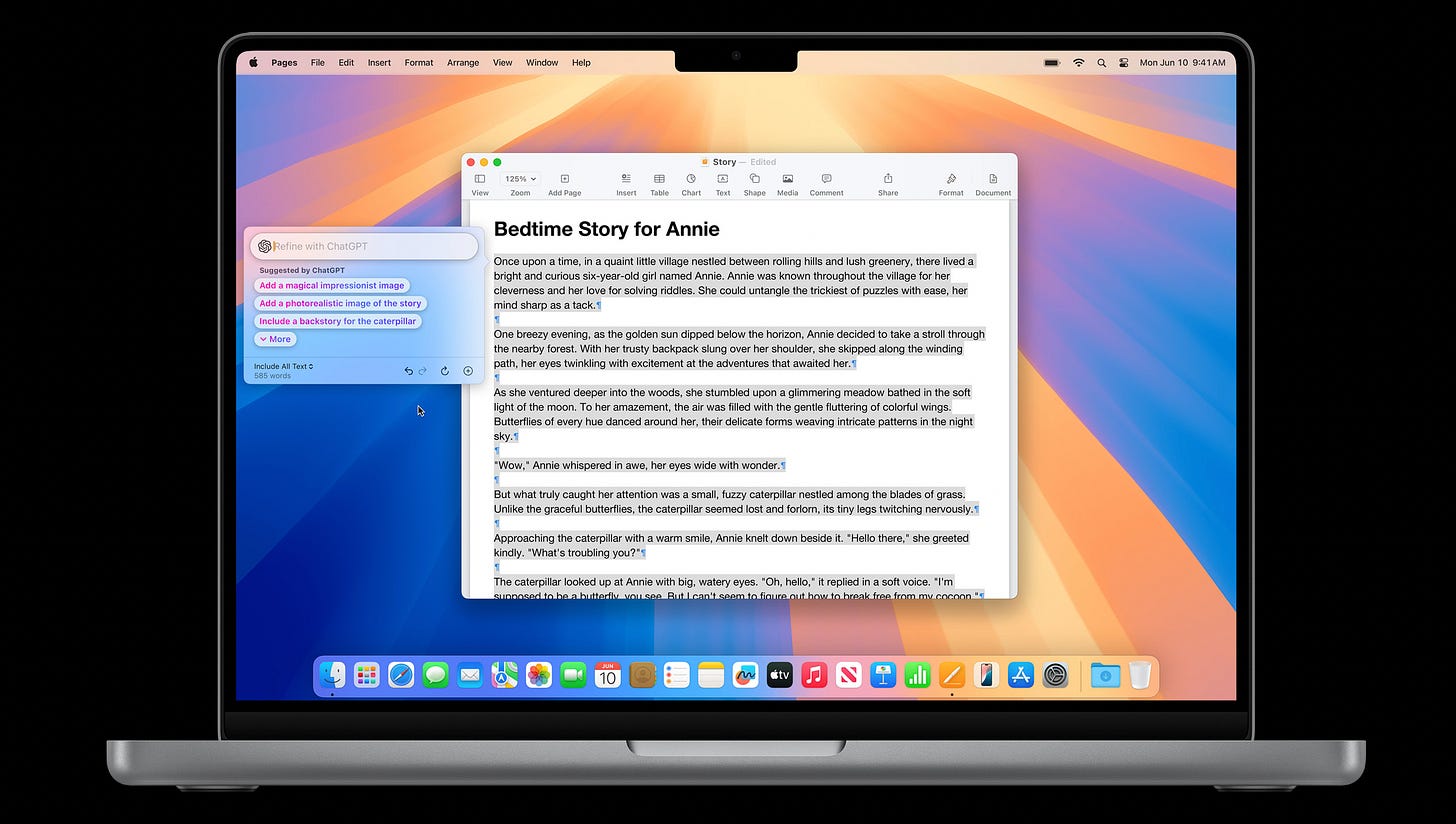

Also, because of a partnership they have with OpenAI, Apple’s latest software will ensure that you won’t need a browser (or a subscription) to use ChatGPT ever again. For iPhone/iPad/Mac users, it’s integrated into all your apps, including most third-party software. And it seems multi-modal (can take in text and image prompts, and produce text and image results).

Apple also went through the trouble of creating an all-new architecture for handling AI requests, built with security in mind.

This is probably the most important aspect of their AI vision: Privacy.

Apple has a reputation (not necessarily a history, but a reputation) of respecting user privacy by shielding their users from the snooping clutches of capitalistic data companies who want to learn their behaviours through personal data. This is a promise that has often been kept to their own detriment even, as Siri arguably would be a much better voice assistant if Apple took advantage of user preferences and data across the over 2 billion active Apple devices out there.

But that’s a story for another day.

In Apple’s version of AI, most prompts will be handled with on-device computation (meaning your personal info stays on-device and never goes to an external server). And pioneering another security feature, Apple says that in the event that a prompt does need to be processed on an external server, it goes to one that has a private relay network which scrambles your IP address and keeps no record of the information in your prompt.

This is crucial.

OpenAI’s ChatGPT client does not fall under intense scrutiny for privacy concerns, mostly for two reasons. First, it is free to use for many people, who are just happy to be able to use AI at no cost. Second, it doesn’t really know anything about you. ChatGPT, mostly used via a web browser, is a software interface that only knows what you tell it in your prompt. If the task it is asked to deliver requires the use of personal info, it would either request it, or fill it in with sample info.

Your mobile phone and personal computer, however, know everything about you.

This is the biggest plus, and biggest pitfall, of built-in AI on personal devices.

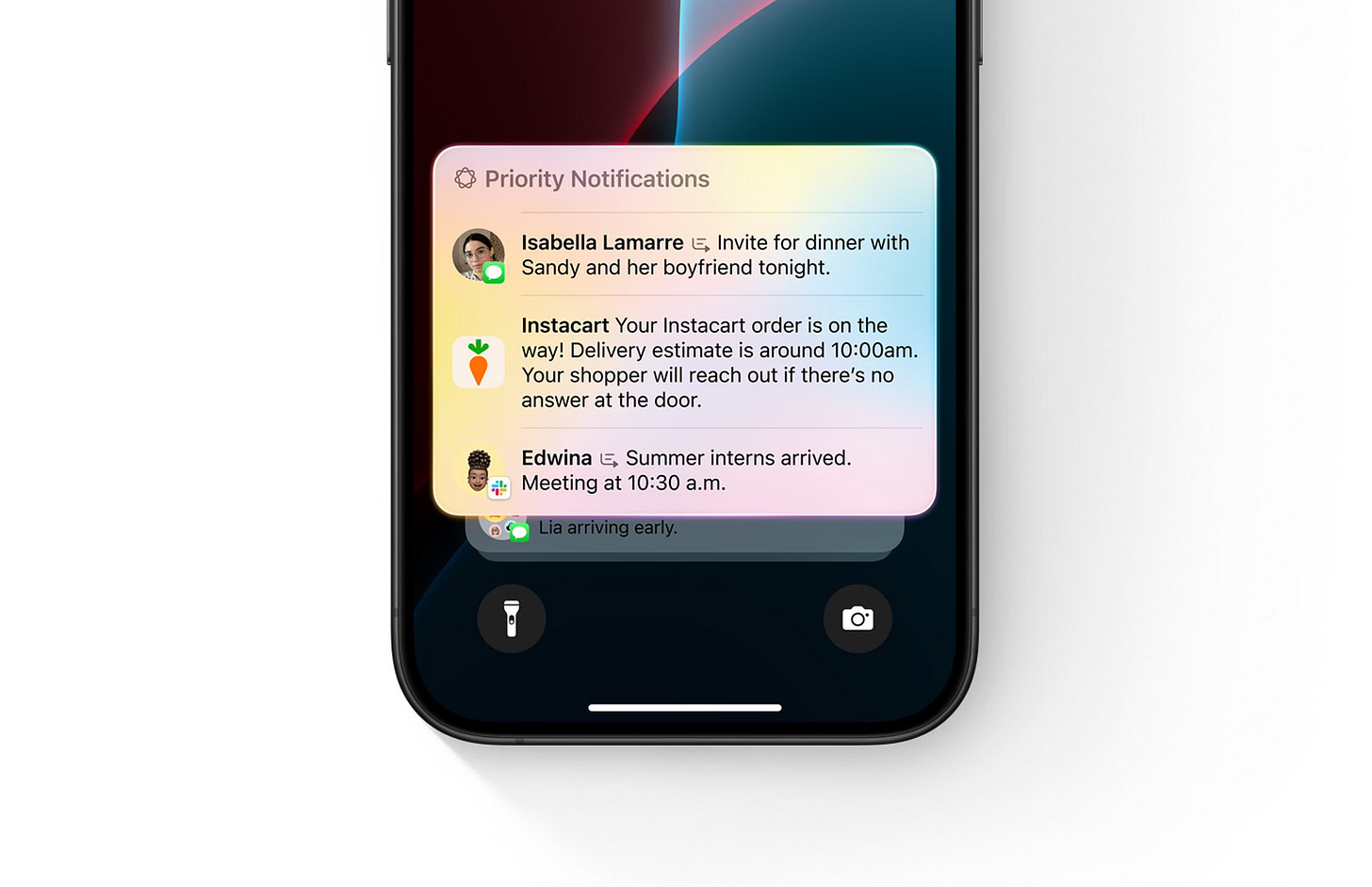

A plus, because it means AI can now help you as an Iron Man Jarvis-esque assistant would; demos show that you can now use AI to automatically input tasks on your calendar that you might’ve forgotten to schedule, simply by asking Siri to go through your messages/inbox and look for so and so. This is wildly more practical for productivity, as it is a realistic use case that ties perfectly with your existing phone use experience. Another example is Priority Notifications, which is basically your device knowing which notifications are from newsletters, promotional platforms, or real people (and even the context of the conversations, so for instance, a task update message from your boss on Slack will get stacked on top of the message where your favourite coworker is simply sending you a meme).

However, the pitfall, which would’ve been the trade-off of privacy to get access to the cloud resources required to process complex prompt requests in real-time, seems to have been mitigated expertly by Apple.

My overarching take on Apple Intelligence will just have to wait until Fall, when I can actually get my hands on it. But so far, it seems like a great plus.

Also, one cannot help but acknowledge the plethora of announced features that are basically recycled from other companies’ efforts in the same space. Take for instance erasing background elements in Photos, which launched on Google Pixel back in 2021 (Pixel called theirs Magic Eraser). Or the long list of action functions Siri is expected to receive with Apple Intelligence, which were already a mainstay on Samsung’s virtual assistant, Bixby. Or the several text prompt actions like summarise, etc, which were already available on Windows Copilot.

However, to me, these things don’t matter at all. We knew Apple weren’t pioneers in this space anyway, and since we expect many of these features to be the new normal in the next few years, we are going to be seeing more feature overlap from all companies (just like multi-touch screens are a staple for mobile phones now regardless of who pioneered them).

The Real Apple Intelligence

In my opinion, the real Apple Intelligence is their ability to steer clear of existing stereotypes associated with the technology they are working with, simply by refusing to call it by its birth name.

Regarding Generative AI, for instance, there have been concerns about plagiarism and copyright infringement, and extensive debates on the ethics of how Large Language Models are created (they are basically fed the world’s language IP, for free, and regurgitate it at a cost that doesn’t compensate the originators of the works used to train the model). There are also raging squabbles concerning AI art and image generation and what place it should have in the future of creativity, productivity and technology. Apple seem to have sidestepped all that by simply refusing to call Apple Intelligence, Artificial Intelligence. Their web page announcing the feature reads:

On a personal note, I have my reservations concerning many features, like Genmoji (which lets users create new emojis and stickers with Gen AI models) and some other image generation features, which I feel should’ve been left out of the mix for just a little while longer simply because they seem underbaked and forced. I get that impression from a lot of what was announced this week, at least with respect to AI. But it’s very understandable. There’s a palpable fear with Apple, it seems, that the industry could move past them if they didn’t act fast. And act fast they did (at least, for a company of their stature).

But it will be interesting to see if even a multi-trillion dollar company like Apple can survive the reputation of Artificial Intelligence, and it will be equally intriguing to see the role they play in establishing it as a mainstay in consumer technology for the foreseeable future. Oh, and I’m curious to know who got the short end of this Altman-Cook deal. I guess time will tell.

Overall, Apple Intelligence is perhaps exactly what we expected from Apple: a robust rollout of features for existing use cases that justify their late entry.

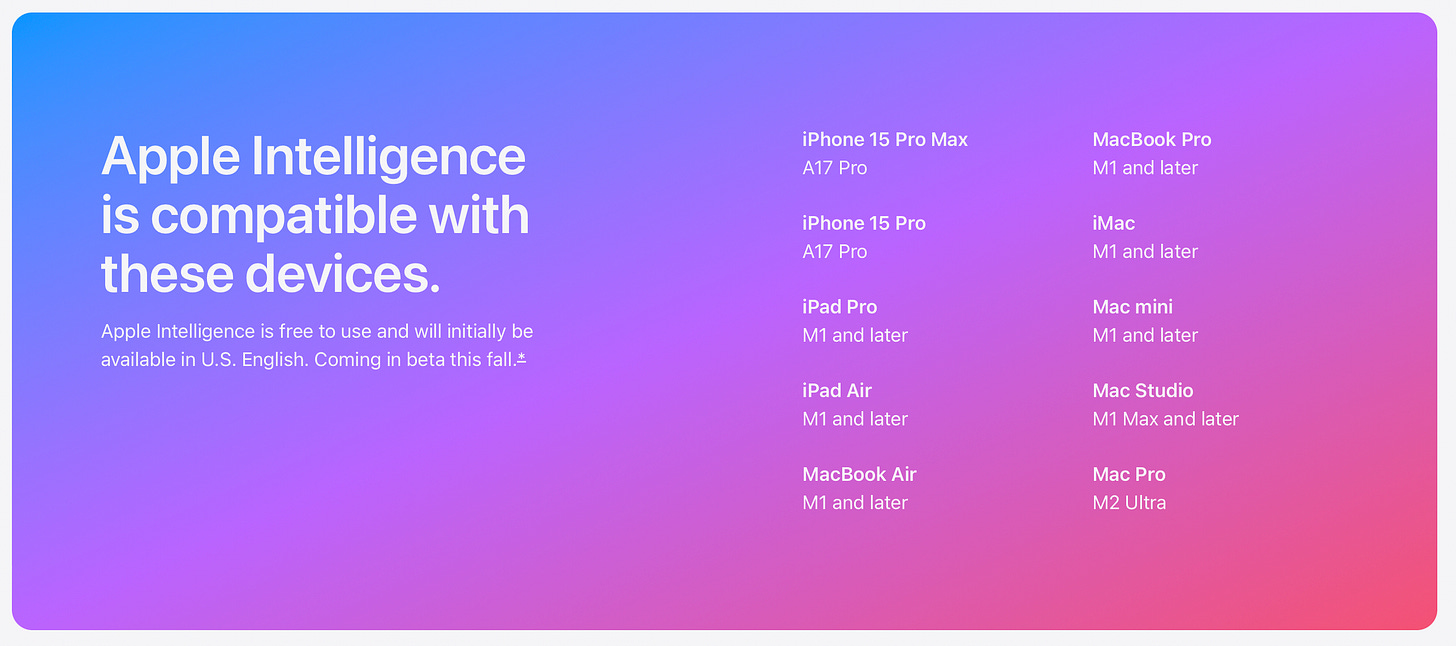

Too bad most users won’t get to use them any time soon:

Nine other things worth sharing:

MKBHD sat down with Apple’s CEO Tim Cook to talk about everything from Artificial Intelligence to Apple’s history with technology. Cook notably says, “Our objective is never to be first. Our objective is to be best”.

You can watch the entire WWDC keynote here. There are announcements about updates to other Apple software too:

Watched The Revenant for the first time. I enjoyed it so much that I went ahead to read this Hollywood Reporter article on The Real Story of ‘The Revenant’ which is significantly different from the version that Hollywood made (of course) but just as intriguing.

I saw Bad Boys 4 in theatres, and enjoyed it enough to want to see Bad Boys 2 again. The latter might be the best of the franchise to be honest.

For all my bredrin suffering from shiny object syndrome just like me:

Another photo from earlier this week that really made me overthink about the concept of overthinking.

Earlier this week, there was this AI video generated by Luma AI that was supposed to be some Ad for like a Monsters animated show. Of course, it was terrible. Although I haven’t read it yet, Jayden Libran, a voice actor and filmmaker, shared a thread on everything wrong with it here. But I think one of the most interesting things about seeing AI do animation is how little attention it pays to physics, which is something that animators cannot afford to ignore if they want their work to come out looking realistic. I think AI needs to read The Illusion of Life: Disney Animation. It was written by two animators at Disney in 1981 (Ollie Johnston and Frank Thomas) and chronicles the 12 principles of animation, all of which AI seems to violate consistently. AI will undoubtedly get better, but until then.

I know it’s probably just a phase but Apple TV+ has some of the best original programming available on television right now. Check out these titles, fgs.

I discovered the poem Ozymandias (by Percy Bysshe Shelley) for the first time this week. Eerie, but inevitable.

Did you just give AI a book recommendation?